How are OpenAI and Disney tied together, and what strategic moves are AMD, SoftBank, and NVIDIA making?

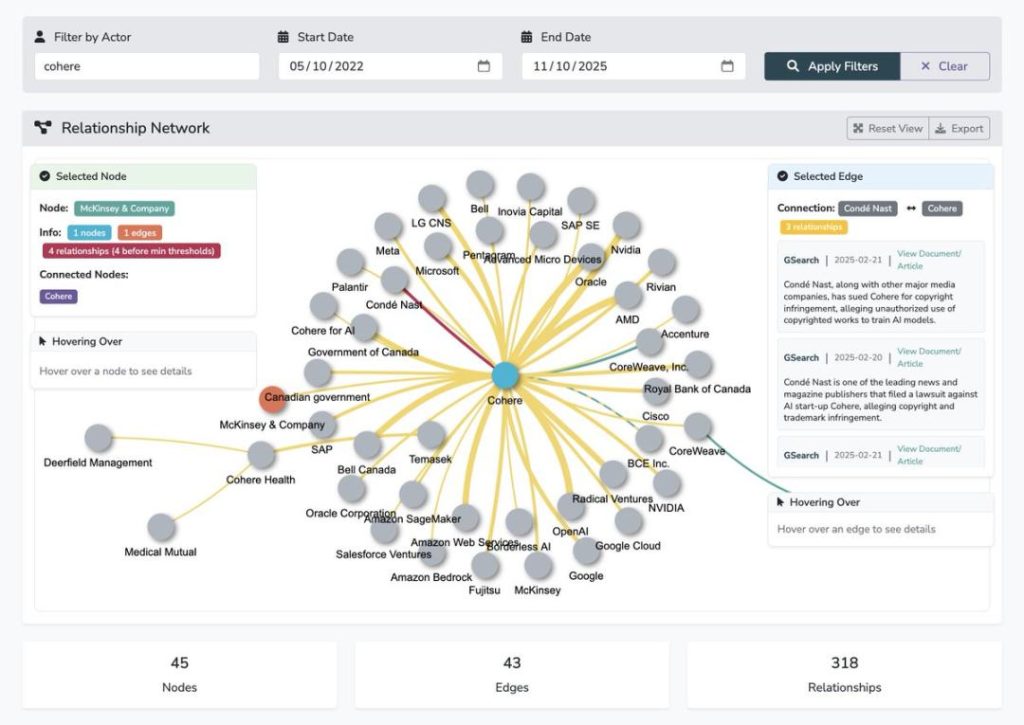

Carnegie Mellon University has just dropped an “industrial nuclear bomb”: the first AI supply chain dataset to systematically map the flows of data, computing power, models, capital, and even talent.

Who controls the upstream bottlenecks? Who has a stranglehold on the global AI industry?

This time, the real-world “network of relationships” among capital, technology, and power has been laid bare.

Insights into the AI Industry Chain: Uncovering Industrial Secrets

The AI supply chain describes how AI models, data, and artifacts are produced upstream and how they are adapted and used downstream.

To build this dataset, the researchers scraped thousands of news articles, press releases, SEC filings, and other sources, and they continue to update it weekly.

Market concentration in the upstream part of the supply chain creates bottlenecks, which can lead to cascading failures when product or service disruptions occur.

It may also foster monopolistic behavior, where a few large companies can set prices and contract terms at will.

This data can be used to uncover close financial relationships among AI organizations, including cross-investments and circular investments.

It can also be used to track the use and adoption of AI across downstream industries, which helps in monitoring issues such as AI-related harms and homogenization, where many downstream products end up relying on the same few upstream models.
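In graph terms, a circular investment is a directed cycle in an investor-to-investee network. The following is a minimal sketch, assuming investment records can be loaded as (investor, investee) pairs; the organization names are hypothetical placeholders, not entries from the CMU dataset, and depth-first search is simply one standard way to find such a cycle, not necessarily the method the researchers use.

```python
from collections import defaultdict

# Hypothetical investment edges (investor -> investee).
# Placeholder names only; NOT records from the CMU dataset.
EDGES = [
    ("OrgA", "OrgB"),
    ("OrgB", "OrgC"),
    ("OrgC", "OrgA"),  # closes a loop: A -> B -> C -> A
    ("OrgD", "OrgB"),
]


def find_cycle(edges):
    """Return one directed cycle as a list of nodes, or None if there is none."""
    graph = defaultdict(list)
    for src, dst in edges:
        graph[src].append(dst)

    WHITE, GRAY, BLACK = 0, 1, 2  # unvisited, on the current DFS path, finished
    color = defaultdict(int)
    path = []

    def dfs(node):
        color[node] = GRAY
        path.append(node)
        for nxt in graph[node]:
            if color[nxt] == GRAY:
                # Back edge: the cycle runs from nxt's position in the path to here.
                return path[path.index(nxt):] + [nxt]
            if color[nxt] == WHITE:
                cycle = dfs(nxt)
                if cycle:
                    return cycle
        path.pop()
        color[node] = BLACK
        return None

    for start in list(graph):
        if color[start] == WHITE:
            cycle = dfs(start)
            if cycle:
                return cycle
    return None


print(find_cycle(EDGES))  # → ['OrgA', 'OrgB', 'OrgC', 'OrgA']
```

On real data, one would likely want every cycle rather than the first one found, but the back-edge test shown here is the core idea.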

Yet despite the AI supply chain's increasingly central role in the ecosystem, it remains hard to map and analyze clearly.

Most market reports merely list the key players but rarely map the relationships and dependencies among them.

This project aims to fill this gap: by mapping the AI supply chain, it examines how various participants interact.

Clarifying these connections facilitates several key analyses:

Bottlenecks and Industry Resilience: The AI industry relies on several key inputs (computing power, data, talent, etc.). Bottlenecks, meaning nodes in the chain that a large share of the industry depends on, make the whole industry vulnerable.

Innovation and Governance: To formulate effective AI policies and governance mechanisms, it is essential to understand the flow of knowledge, resources, and capabilities within the ecosystem. Understanding upstream and downstream dependencies is the foundation for designing interventions related to computing power, information disclosure, liability attribution, and safety regulation.

Market Concentration and Competitive Landscape: Mapping the supply chain reveals where market forces are concentrated and how competition evolves at different levels of the ecosystem.

Strategic Insights: A systematic perspective on the supply chain helps decision-makers track ecosystem evolution, identify key nodes, and anticipate shifts in strategic advantages. Clarifying the flow patterns of capital, talent, and capabilities enables better prediction and more effective intervention.
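The bottleneck notion in the first item can be approximated by counting how many organizations sit downstream of each node, directly or indirectly. The sketch below assumes supply links are available as (supplier, customer) pairs; the names and the 50% threshold are hypothetical, and downstream reach is only one possible proxy for dependency, not the dataset's own metric.

```python
from collections import defaultdict, deque

# Hypothetical supply edges (supplier -> customer); placeholder names only.
SUPPLY = [
    ("ChipVendor", "CloudX"),
    ("ChipVendor", "CloudY"),
    ("CloudX", "ModelLab1"),
    ("CloudY", "ModelLab2"),
    ("ModelLab1", "AppA"),
    ("ModelLab1", "AppB"),
    ("ModelLab2", "AppC"),
]


def downstream_reach(edges):
    """Map each node to the number of other nodes that depend on it, directly or indirectly."""
    graph = defaultdict(set)
    nodes = set()
    for src, dst in edges:
        graph[src].add(dst)
        nodes.update((src, dst))

    reach = {}
    for node in nodes:
        # Breadth-first search over everything this node supplies.
        seen = set()
        queue = deque(graph[node])
        while queue:
            cur = queue.popleft()
            if cur not in seen:
                seen.add(cur)
                queue.extend(graph[cur])
        reach[node] = len(seen - {node})  # ignore the node itself if it sits on a cycle
    return reach


def bottlenecks(edges, share=0.5):
    """Flag nodes whose disruption would reach at least `share` of all other nodes.

    The default threshold is a hypothetical choice for illustration.
    """
    reach = downstream_reach(edges)
    others = len(reach) - 1
    return sorted(n for n, r in reach.items() if r >= share * others)


print(bottlenecks(SUPPLY))  # → ['ChipVendor']
```

In this toy graph only `ChipVendor` is flagged, because a disruption there would propagate to every other node, which is the cascading-failure pattern described earlier.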

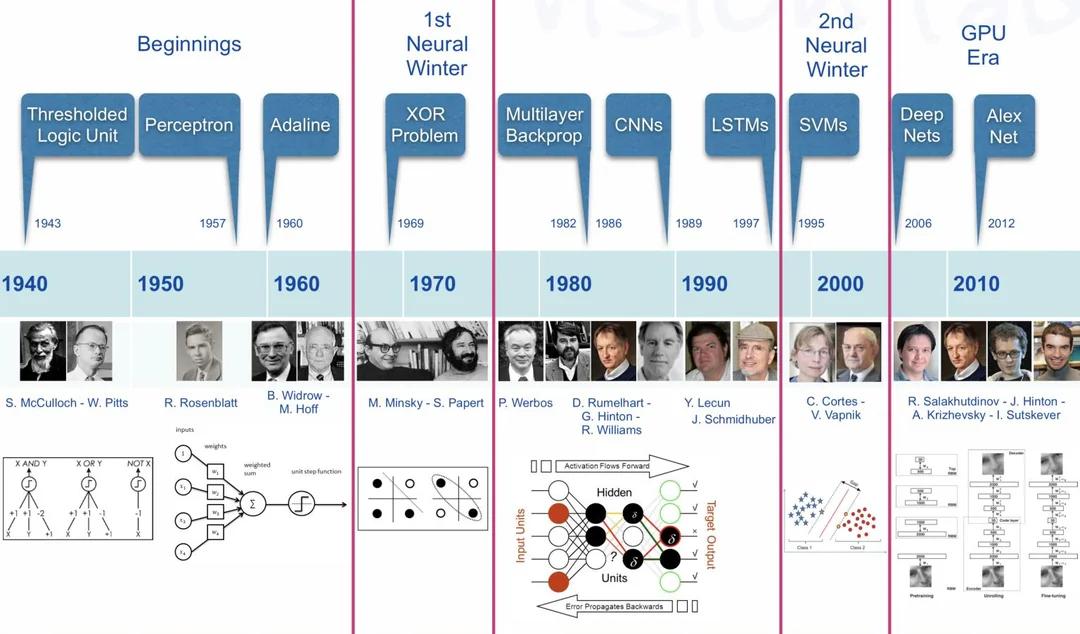

A Century in the Making: AI Finally Becomes an Industry

AI has been nearly a century in the making, from early ideas to real-world implementation.

However, since the advent of large language models (LLMs), AI products and services have surged like a geyser.

LLMs let people interact with AI in natural language, significantly lowering the barrier to entry and propelling AI into the mainstream.

The booming AI industry has given rise to the so-called “AI supply chain”: a complex network of numerous organizations jointly involved in the research, development, deployment, and use of AI systems.

The chain includes cloud service providers, data curators, observability platforms, model developers, enterprise users, and so on.

Generally, those who provide products or services to others are considered upstream, while those who receive them are downstream.

By this definition, the chain's furthest upstream end reaches back to human annotators and feedback providers, while its downstream end extends to the end users of AI applications.
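The upstream/downstream convention amounts to a directed graph ordered from providers to receivers. A minimal sketch using Python's standard `graphlib` module (Python 3.9+), with a hypothetical and heavily simplified set of links between roles named in this article; real supply relationships are far denser and are not taken from the dataset here.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical, simplified links between roles mentioned above.
# Each key maps to the roles it receives products or services from (its upstream).
RECEIVES_FROM = {
    "data curators": {"human annotators"},
    "model developers": {"data curators", "cloud service providers"},
    "enterprise users": {"model developers"},
    "end users": {"enterprise users"},
}

# static_order() lists every role only after all of its upstream providers.
order = list(TopologicalSorter(RECEIVES_FROM).static_order())
print(order)
```

The relative order of independent sources (here, annotators and cloud providers) is not fixed, but every provider is guaranteed to appear before the roles that receive from it.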

The Maturation of the AI Industry

The emergence of the AI supply chain itself is of great significance.

In the past, AI and machine learning systems were mostly built in-house by enterprises or developed independently by a handful of institutions: teams collected their own data, trained their own models, and built their own infrastructure.

As some steps, such as dataset collection, began to be outsourced, data services like Amazon Mechanical Turk and Scale AI emerged.

Today, with the rapid expansion of the AI industry, specialized companies have started to take over individual segments of the research and development process.

This disaggregation brings greater specialization and efficiency, marks the industry’s maturation, and opens up new room for innovation and competition.

If we cannot see the AI supply chain clearly, we will be led by the nose by technological myths and commercial hype.

Once the chain is laid out, it becomes clear who is creating bottlenecks, who is reaping excessive profits, and who is passing risks down layer by layer.

Future AI governance should not just focus on a single “problematic model” but must hold the entire supply chain accountable:

Who provided the computing power and data, who drove the deployment and diffusion, who profited from it, and who bears the consequences.

Only when regulators, researchers, and the public learn to ask questions along the entire supply chain can AI hope to shift from a “power machine of a few giants” to an “infrastructure shared by the majority.”